Clues to deepfakes may be in the eyes.

Researchers at the University of Hull in England reported July 15 that eye reflections offer a potential way to suss out AI-generated images of people. The approach relies on a technique also used by astronomers to study galaxies.

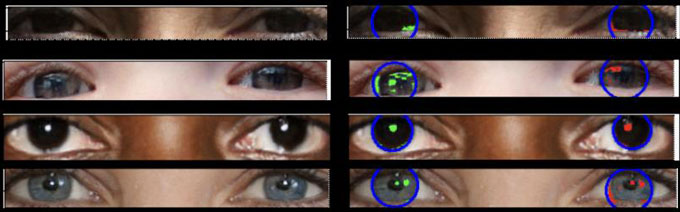

In real images, light reflections in the eyeballs match up, showing, for instance, the same number of windows or ceiling lights. But in fake images, there’s often an inconsistency in the reflections. “The physics is incorrect,” says Kevin Pimbblet, an observational astronomer who worked on the research with then–graduate student Adejumoke Owolabi and presented the findings at the Royal Astronomical Society’s National Astronomy Meeting in Hull.

To carry out the comparisons, the team first used a computer program to detect the reflections and then used those reflections’ pixel values, which represent the intensity of light at a given pixel, to calculate what’s called the Gini index. Astronomers use the Gini index, originally developed to measure wealth inequality in a society, to understand how light is distributed across an image of a galaxy. If one pixel has all the light, the index is 1; if the light is evenly distributed across pixels, the index is 0. This quantification helps astronomers classify galaxies into categories such as spiral or elliptical.
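A rough sketch can make the Gini index concrete. The version below uses a formulation common in galaxy-morphology work: sort the pixel intensities in ascending order and weight each one by its rank. It reproduces the two endpoints described above, 1 when a single pixel holds all the light and 0 when the light is spread evenly. This is an assumption about the form of the index; the article does not say exactly which variant the Hull team used. The function name `gini_index` is illustrative.

```python
from typing import Sequence


def gini_index(pixels: Sequence[float]) -> float:
    """Gini index of a set of pixel intensities (illustrative sketch).

    Assumed formulation, common in galaxy morphology:
        G = sum_i (2i - n - 1) * X_i / (mean(X) * n * (n - 1))
    where X_1 <= X_2 <= ... <= X_n are the sorted intensities, i = 1..n.
    """
    values = sorted(abs(float(p)) for p in pixels)
    n = len(values)
    total = sum(values)
    if n < 2 or total == 0:
        raise ValueError("need at least two pixels and some light")
    # Bright pixels near the top of the ranking get positive weights,
    # dim ones near the bottom get negative weights.
    weighted = sum((2 * i - n - 1) * x for i, x in enumerate(values, start=1))
    # mean(X) * n equals the total light, so the denominator simplifies.
    return weighted / (total * (n - 1))


print(gini_index([0, 0, 0, 10]))  # all light in one pixel
print(gini_index([5, 5, 5, 5]))   # light spread evenly
```

The first call prints `1.0` and the second `0.0`, matching the two extremes in the paragraph above. Real reflection patches would fall somewhere in between.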

In the current work, the difference between the Gini indices of the left and right eyeballs is the clue to an image’s authenticity. For about 70 percent of the fake images the researchers examined, this difference was much greater than it was for real images, which tended to show little or no difference.
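The comparison step can be sketched in the same hedged way. This example assumes the reflections have already been located and cropped into two lists of pixel intensities, one per eye; the detection program itself is not shown. It also assumes the score is the absolute difference of the two Gini indices. The `threshold` is a hypothetical placeholder rather than a value from the study. As Pimbblet notes, no single number marks an image as fake, so the flag only signals that a person should take a closer look.

```python
from typing import Sequence


def gini_index(pixels: Sequence[float]) -> float:
    # Assumed galaxy-style Gini index: 0 = evenly spread light, 1 = one bright pixel.
    values = sorted(abs(float(p)) for p in pixels)
    n = len(values)
    total = sum(values)
    if n < 2 or total == 0:
        raise ValueError("need at least two pixels and some light")
    weighted = sum((2 * i - n - 1) * x for i, x in enumerate(values, start=1))
    return weighted / (total * (n - 1))


def reflection_mismatch(left_eye: Sequence[float], right_eye: Sequence[float]) -> float:
    """Absolute difference between the Gini indices of the two eye reflections."""
    return abs(gini_index(left_eye) - gini_index(right_eye))


def needs_human_review(
    left_eye: Sequence[float],
    right_eye: Sequence[float],
    threshold: float = 0.1,  # hypothetical cutoff, not taken from the research
) -> bool:
    """Flag an image for a closer look when the reflections disagree strongly."""
    return reflection_mismatch(left_eye, right_eye) > threshold


# One sharp highlight in one eye versus diffuse light in the other: flagged.
print(needs_human_review([0, 0, 0, 10], [5, 5, 5, 5]))
# Same light pattern at different brightness: the Gini index ignores overall
# scale, so the two eyes match and the image is not flagged.
print(needs_human_review([1, 2, 3, 4], [2, 4, 6, 8]))
```

The first call prints `True` and the second `False`. Because the Gini index depends only on how light is distributed, not on total brightness, one eye catching slightly more light than the other does not by itself raise a flag in this sketch.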

“We can’t say that a particular value corresponds to fakery, but we can say it’s indicative of there being an issue, and perhaps a human being should have a closer look,” Pimbblet says.

He emphasizes that the technique, which could also work on videos, is no silver bullet for detecting fakery (SN: 8/14/18). A real image can look like a fake, for example, if the person is blinking or if they are so close to the light source that only one eye shows the reflection. But the technique could be a part of a battery of tests — at least until AI learns to get reflections right.