It’s a bright day for computing — literally.

Two tech companies have unveiled computer components that use laser light to process information. These futuristic processors could soon solve specific real-world problems faster and with lower energy requirements than conventional computers. The announcements, published separately April 9 in Nature, mark a major leap forward for this alternative approach to computing.

Lightelligence, based in Boston, and Lightmatter, in Mountain View, Calif., have shown that light-based, or photonic, components “can do things that we care about, and that they can do them better than electronic chips that we already have,” says Anthony Rizzo, a photonics engineer at Dartmouth College who was not involved in either study.

Lasers already zap data across the world via fiber optic cables, and photonics plays a role in moving data in advanced data centers. In March, for example, tech company NVIDIA, based in Santa Clara, Calif., announced new technology that uses light to communicate between devices. But, Rizzo says, these light beams don’t compute anything. Inside a conventional computer, incoming light signals are transformed into slower electronic 1s and 0s that move through tiny transistors.

In contrast, light inside the Lightmatter and Lightelligence devices “is actually doing math,” Rizzo says. Specifically, both use light to perform matrix multiplication, a fundamental operation in most AI processing as well as other areas of computing. In both new devices, all other calculations occur in electronic components.
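To make that division of labor concrete, here is a minimal sketch in plain Python of one tiny neural-network layer. It is an illustration only, not how either company's device is programmed. The function names, the weights and the choice of a ReLU step are all assumptions made for the example. The matrix multiplication stands in for the step the article says is done with light, and the remaining step stands in for the work left to electronics.

```python
# Illustrative only: all names and numbers below are hypothetical.

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    k = len(b)
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    columns = list(zip(*b))  # transpose b so its columns are easy to walk
    return [[sum(x * y for x, y in zip(row, col)) for col in columns] for row in a]

def relu(matrix):
    """Replace negative entries with zero (a common non-matrix step in AI models)."""
    return [[max(0.0, value) for value in row] for row in matrix]

inputs = [[1.0, 2.0]]                  # one input with two features
weights = [[0.5, -1.0, 0.0],
           [0.25, 0.5, -2.0]]          # made-up 2 x 3 weight matrix

# The step a photonic component would take on, per the article.
pre_activation = matmul(inputs, weights)

# Everything else stays in conventional electronics.
outputs = relu(pre_activation)

print(pre_activation)  # [[1.0, 0.0, -4.0]]
print(outputs)         # [[1.0, 0.0, 0.0]]
```

Large AI models repeat this pattern many times over with far bigger matrices, which is why speeding up the multiplication alone can matter so much.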

The timing of these new developments is crucial. AI models are growing in size and complexity, while the progress of traditional chips is slowing. Historically, the number of transistors that engineers could squeeze onto chips would roughly double every two years, a trend known as Moore’s law. Tinier transistors meant faster, cheaper computing.
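The arithmetic behind that doubling is worth spelling out. The short sketch below assumes an idealized, exact two-year doubling period, which real chips only roughly followed, and the function name is hypothetical.

```python
def growth_factor(years, doubling_period_years=2):
    """Idealized Moore's-law multiplier: one doubling per period."""
    return 2 ** (years / doubling_period_years)

# Twenty years of doubling every two years is ten doublings.
print(growth_factor(20))  # 1024.0
```

Under that idealized assumption, two decades would yield roughly a thousandfold increase in transistor count.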

But Moore’s law has reached its limit, says Nick Harris, founder and CEO of Lightmatter. Other experts agree. The physics of how electricity moves through transistors prevents them from shrinking much further. Computers based on regular electronic chips “are not going to be getting better,” Harris says. Photonic computing offers a potential solution.

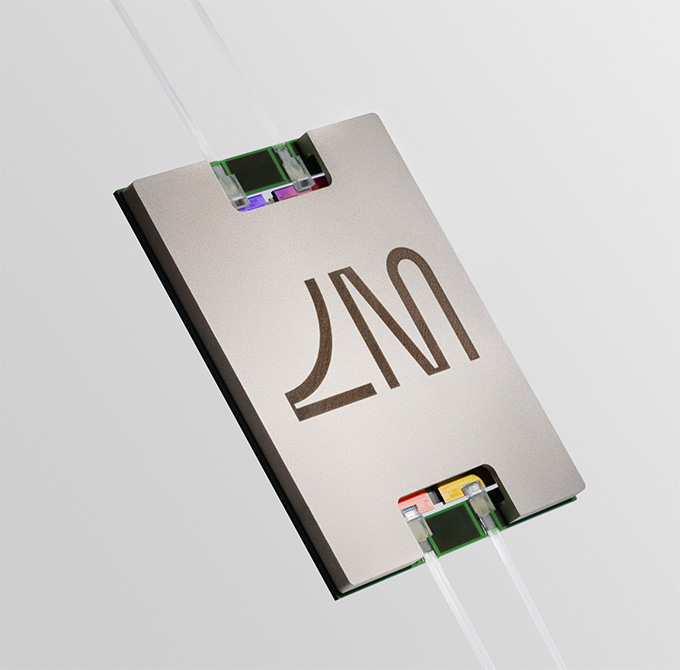

The Lightelligence device, named PACE, combines a photonic chip and an electronic chip to speed up computation for optimization problems, which are crucial for industries such as finance, manufacturing and shipping. The Lightmatter device, on the other hand, is a more general-purpose processor that integrates four light-based and two electronic chips. The team used this system to run mainstream AI technology, including large language models like those behind ChatGPT or Claude. They also ran a deep learning algorithm that practiced playing Atari games, including Pac-Man.

“That’s never been done” using any sort of alternative computer processing technology, Harris says. Engineers had previously built experimental photonic processors that could do math, but these never came close to matching a regular computer’s performance on real-world computing problems.

One big problem with experimental photonic processors has been accuracy. Light signals can take on a vast range of values instead of representing just 1 and 0. If one of these values isn’t transmitted correctly, a tiny mistake could compound into a big error in calculations.
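A toy model shows how quickly such mistakes can grow. The sketch below is not drawn from either study. It assumes, purely for illustration, that every processing stage overshoots by the same small fraction, whereas errors in real hardware are more random. The function name and the 1 percent figure are hypothetical.

```python
def compounded_relative_error(stages, per_stage_error=0.01):
    """Relative error after a signal passes through a chain of stages,
    each of which overshoots by the same small fraction (an assumption)."""
    exact = 1.0
    analog = 1.0
    for _ in range(stages):
        exact *= 1.0                        # an ideal stage leaves the value unchanged
        analog *= 1.0 + per_stage_error     # an imperfect stage adds a small error
    return abs(analog - exact) / abs(exact)

print(compounded_relative_error(1))    # about 0.01, or 1 percent
print(compounded_relative_error(50))   # about 0.64, or 64 percent
```

In this simplified picture, a 1 percent slip per stage snowballs into an error of roughly 64 percent after 50 stages, which is why tight control over the signals matters.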

In the optimization problems Lightelligence tested, some randomness can be a good thing. It helps the system explore solutions more efficiently, the company said in a statement. Lightmatter, by contrast, tackles the accuracy problem by stacking electronic chips atop its photonic ones to carefully control the incoming and outgoing data, thus reducing errors.

Their new processor “is not a lab prototype,” Harris says. “This is a new type of computer. And it’s here.”

The photonic components for both devices can be manufactured using the same factories and processes that already produce electronic chips, Rizzo says. So the technology will scale easily. “These could be in real systems very soon,” he says, adding that the technologies could show up in data centers within five years.