University of Waterloo student Rastin Rassoli’s own struggles with his mental health inspired him to combine two of his passions — computer science and psychology — to help more young people access care and support. At an early co-op placement, for instance, he helped develop an app called Joyi, which delivers bite-sized psychology lessons to help teens manage stress and anxiety.

A new idea emerged, however, as he heard first-hand accounts about difficulties fellow students had accessing care on campus and began learning about the subclinical stage of mental health disorders in his classes. At the same time, he saw stunning leaps being made with large language model artificial intelligence systems like ChatGPT.

Those elements coalesced in Rassoli’s latest creation: Doro, an app that aims to coach students in tackling their mental health concerns early on, before symptoms escalate.

“LLMs can make things very accessible. And in the mental health industry, we really have this problem of accessibility,” he said.

About one in five Canadian youth who reported having “good,” “very good” or “excellent” mental health in 2019 downgraded that status to “fair” or “poor” by 2023, Statistics Canada said this week. People aged 15 to 24 are already more likely to experience mental illness and/or substance use disorders than any other age group.

As young people struggle to find care, a new wave of AI mental health and wellness apps has emerged of late, offering support at one’s fingertips, 24/7. Yet experts warn that an app can’t replace conventional treatment, especially in serious or emergency situations.

What kind of apps are out there?

The general idea behind these apps is for users to type out messages sharing their concerns, as if “chatting” to a real-life therapist.

The technology can draw from a database of knowledge, reference what a person has shared or discussed across multiple past sessions and deliver responses in a convincingly conversational way. An app might encourage further reflection, for instance, or lead a user through guided meditation. Another could prompt you with some cognitive behavioural therapy techniques.

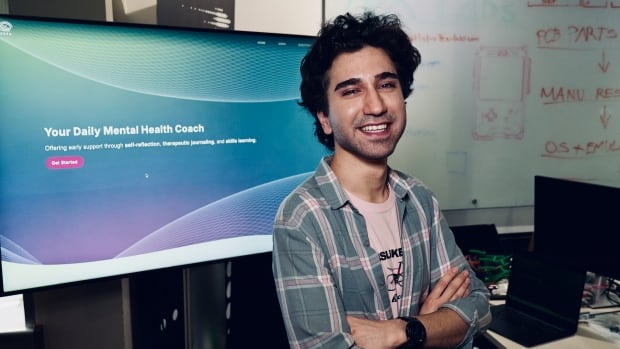

University student Rastin Rassoli gives CBC News a brief demo of Doro, his app using chatbot technology to coach students on tackling mental health concerns at an early stage.

Rassoli envisions Doro as an early intervention tool for youth starting to experience challenges: maybe someone struggling with disrupted sleep, or a person anxious over an upcoming school presentation. Perhaps this student got waitlisted when seeking help on campus, or didn’t reach out in the first place, fearing stigma.

“We try to identify these specific things — the specific risk factors, behaviours and symptoms [shared by the user] — and focus on each of these,” he said.

Then, he says, Doro pulls from a variety of techniques to suggest how to manage those symptoms and offers small tasks for improvement, while also tracking each session. It might suggest journalling, for instance, or TIPP, a method for managing panic attacks.

Can it replace a human therapist?

If a user’s described symptoms cross a certain threshold into a crisis or emergency, however, Doro immediately refers them to a real person.

“For people with serious disorders, we immediately stop the chat. We ask them to please use the helpline or one of the available therapists in your region,” he said.

For those who’ve never seen a mental health professional before, an AI app might seem like an easy starting point, given that it’s indeed tough to share mental health struggles, noted Rachel Katz, a PhD candidate researching ethical issues of using AI for psychotherapy.

“My concern though is that these [apps] don’t actually effectively bridge that gap. It sort of reinforces the isolation of a mental health struggle,” said Katz, who is based at the University of Toronto’s Institute for the History and Philosophy of Science and Technology.

“It reinforces this hyper-independence and kind of further entrenches people in this inability to reach out and actually talk to a person.”

How could these apps benefit young people?

According to the World Health Organization, close to one billion people worldwide — including 14 per cent of the world’s adolescents — suffer from a mental disorder, with anxiety and depression among the most common. Most mental health professionals focus on adults; there aren’t enough serving young people, according to Eduardo Bunge, a psychology professor at Palo Alto University in California, who first began exploring AI chatbots in the mental health space about six years ago.

Even if students do connect to support, help isn’t available at all hours every day, added Bunge, who believes clinicians working alongside AI apps could help increase young people’s access to care.

Different mental health concerns require different interventions, says Palo Alto University Professor Eduardo Bunge, but if people find talking to a chatbot helpful, why shouldn’t professionals add that technology to their toolbox?

Mental health professionals can create and curate the knowledge bases feeding into these AI platforms and set limits for them, and should also conduct ongoing monitoring of the user interactions, he said, which could help ease many people’s concerns.

A chatbot “is like a very good first line of treatment — that may not be sufficient [alone]… but it’s better than doing nothing. And it could be a perfect segue, if we use it carefully, to lead them to have better outcomes.”

Can you trust a chatbot?

There’s definite potential in the technology, says Dr. Michael Cheng from Ottawa children’s hospital CHEO. A chatbot could be used to easily point a young person toward specific mental health resources or information, for instance.

Still, he’s concerned about issues of safety, privacy and confidentiality, use of patient data and an overall lack of regulation at the moment.

“It’s a bit of a Wild West right now,” said the child and adolescent psychiatrist. “Anyone can just [release] an app and say it does whatever.”

Cheng is also wary of young people turning to an app rather than building healthy human connections, which are necessary for mental wellness.

“We are seeing an epidemic of children, youth and families who are disconnected from people.… They’re spending excessive time in front of screens.

“The challenge with generative AI is that it can be used to create experiences which are even more addictive.”

Katz, the medical ethicist, believes that at this point chatbots shouldn’t be used without supervision by human therapists. She pointed to high-profile instances of chatbots delivering problematic or harmful suggestions, like the case where a chatbot replacing an eating disorder hotline suggested dieting and weight loss to users.

What do students think?

Jason Birdi, who studies biotechnology at Waterloo, has tried chatbots for generating ideas, but he draws the line at other uses, including anything health-related.

“I don’t trust the system. I don’t know where that information is going,” he said.

Kaden Johnson, a University of Toronto Mississauga student, sees promise in them, since he knows some peers have a hard time opening up to another person and worry about being judged.

Yet new tools like this should be an option and not a replacement for traditional services or professionals, said the second-year political science student.

“Mental health specialists or [resources] where people can go to access those services shouldn’t be cut back as we roll out AI. I think they should continue to increase.”